Neural Networks: The Backbone of Modern AI

Introduction

Neural networks have revolutionized the way machines process data, interpret patterns, and perform tasks once thought exclusive to human intelligence. Their application ranges from image recognition to language translation, and even self-driving cars. In this post, we’ll delve into the fundamentals of neural networks, their architecture, and their profound impact on technology.

What Are Neural Networks?

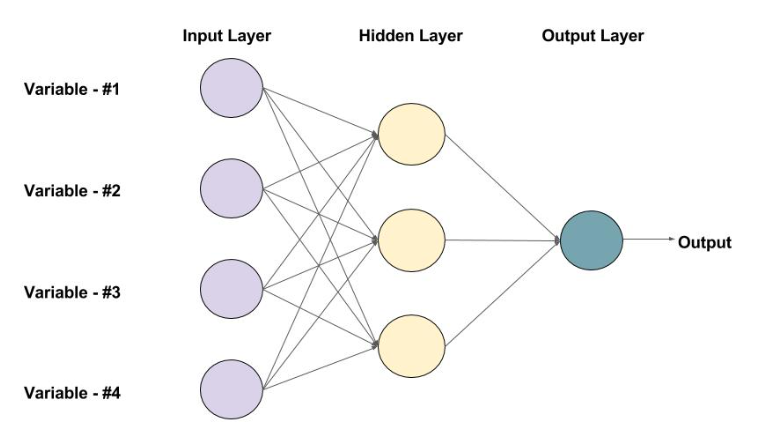

At its core, a neural network is a computational model inspired by the human brain. It consists of layers of neurons, or nodes, which process input data and produce an output. Neural networks are part of the broader family of machine learning algorithms, where computers learn patterns from data to make predictions or decisions.

Components of a Neural Network

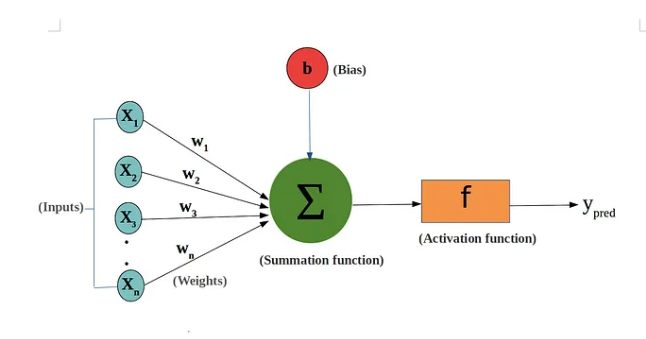

- Neurons (Nodes): Loosely modeled on the neurons in our brain, these are the basic units of a neural network. Each neuron takes one or more inputs, computes a weighted sum of them plus a bias, passes that sum through an activation function, and sends the result to the next layer.

- Layers:

  - Input Layer: This is where the network receives data. Each node in this layer corresponds to a feature in the input dataset.

  - Hidden Layers: These are intermediate layers where most of the computation is carried out. Stacking more hidden layers lets a network learn increasingly abstract features, which is what the "deep" in deep learning refers to.

  - Output Layer: This produces the final result or prediction based on the inputs and the transformations in the hidden layers.

- Weights and Biases: Weights control the importance of each input, and biases help shift the output. The network adjusts these parameters during training to minimize error.

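- Activation Function: This applies a nonlinear transformation to a neuron's weighted sum and so determines how strongly the neuron fires. Without it, a stack of layers would collapse into a single linear transformation. Popular activation functions include ReLU (Rectified Linear Unit), Sigmoid, and Tanh, each with its own advantages depending on the task.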
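To make the components above concrete, here is a minimal NumPy sketch of two layers computing their outputs. The function names (`dense_forward`, `relu`, `sigmoid`) and all weight values are made up for illustration; real networks learn their weights during training rather than having them written by hand.

```python
import numpy as np

def relu(z):
    # ReLU: keep positive values, clamp negatives to zero.
    return np.maximum(0.0, z)

def sigmoid(z):
    # Sigmoid: squash any real number into the range (0, 1).
    return 1.0 / (1.0 + np.exp(-z))

def dense_forward(x, W, b, activation):
    # One layer: weighted sum of the inputs plus a bias, then the activation.
    return activation(x @ W + b)

# Hypothetical toy values, chosen only for illustration.
x = np.array([1.0, 2.0, 3.0])        # input layer: 3 features
W1 = np.array([[0.5, -1.0],
               [0.25, 0.5],
               [-0.5, 0.0]])         # weights: 3 inputs -> 2 hidden neurons
b1 = np.array([0.0, 0.5])            # one bias per hidden neuron
W2 = np.array([[1.0],
               [2.0]])               # weights: 2 hidden neurons -> 1 output
b2 = np.array([-1.0])

hidden = dense_forward(x, W1, b1, relu)          # hidden layer activations
output = dense_forward(hidden, W2, b2, sigmoid)  # output layer prediction
print(hidden, output)
```

Swapping `relu` for `np.tanh` in the hidden layer changes only the activation, which is an easy way to see how that choice affects the values passed forward.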

How Neural Networks Learn: Training with Backpropagation

Training a neural network involves feeding it data and adjusting the weights and biases through a process called backpropagation. Here’s how it works:

- Forward Propagation: The input data passes through the layers, and an output is generated.

- Loss Calculation: The difference between the predicted output and the actual output (from training data) is calculated using a loss function.

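- Backward Propagation: The network computes how much each weight and bias contributed to the error by applying the chain rule backward through the layers, from output to input. An optimization algorithm such as gradient descent then uses these gradients to update the parameters and reduce the loss in subsequent iterations.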

- Optimization: Repeating these steps over many passes through the training data gradually lowers the loss, and the network's predictions improve as a result.
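The four steps above can be sketched as a short training loop. This is a minimal illustration, not a production recipe: it assumes a tiny two-layer network, the XOR toy dataset, mean squared error as the loss, and plain full-batch gradient descent. The layer sizes, learning rate, and step count are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy dataset (XOR): 4 examples, 2 features each, 1 target each.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([[0.0], [1.0], [1.0], [0.0]])

# Randomly initialised parameters: 2 inputs -> 4 hidden -> 1 output.
W1 = rng.normal(0.0, 1.0, size=(2, 4))
b1 = np.zeros(4)
W2 = rng.normal(0.0, 1.0, size=(4, 1))
b2 = np.zeros(1)

lr = 0.5      # learning rate (assumed value)
losses = []

for step in range(2000):
    # 1. Forward propagation.
    h = np.tanh(X @ W1 + b1)
    y_hat = 1.0 / (1.0 + np.exp(-(h @ W2 + b2)))

    # 2. Loss calculation (mean squared error).
    loss = np.mean((y_hat - y) ** 2)
    losses.append(loss)

    # 3. Backward propagation: chain rule from the output back to the input.
    d_yhat = 2.0 * (y_hat - y) / y.size    # dLoss/dy_hat
    d_z2 = d_yhat * y_hat * (1.0 - y_hat)  # through the sigmoid
    dW2 = h.T @ d_z2
    db2 = d_z2.sum(axis=0)
    d_h = d_z2 @ W2.T
    d_z1 = d_h * (1.0 - h ** 2)            # through the tanh
    dW1 = X.T @ d_z1
    db1 = d_z1.sum(axis=0)

    # 4. Optimization: one gradient descent update.
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

In practice, frameworks compute these gradients automatically, but writing them out once shows that backpropagation is the chain rule applied layer by layer.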

Types of Neural Networks

- Feedforward Neural Networks (FNNs): These are the simplest type, where data flows in one direction only, from input to output, with no cycles or loops.

- Convolutional Neural Networks (CNNs): Specialized for tasks like image recognition, CNNs use convolutional layers to detect spatial hierarchies in images, making them highly effective in visual data processing.

- Recurrent Neural Networks (RNNs): Designed for sequential data, such as time series or natural language, RNNs have loops that allow them to remember information from previous inputs, making them useful for tasks like speech recognition and translation.

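- Generative Adversarial Networks (GANs): GANs consist of two neural networks trained in competition. A generator creates synthetic data, and a discriminator tries to tell it apart from real data. As each improves against the other, the generator learns to produce realistic output, such as high-quality images or deepfakes.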
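Two of the ideas above, the sliding filter in a CNN and the loop in an RNN, are easier to see in code. The sketch below is a simplified illustration with hypothetical function names (`conv2d_valid`, `rnn_forward`) and toy inputs. It uses a single filter with no padding, a stride of 1, and a basic tanh recurrence, whereas real libraries add channels, batching, and learned weights.

```python
import numpy as np

def conv2d_valid(image, kernel):
    # Slide the kernel over the image (no padding, stride 1) and take the
    # element-wise product sum at each position.
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def rnn_forward(inputs, W_x, W_h, b):
    # Simple recurrence: the hidden state h carries information from
    # earlier time steps into the computation for the current one.
    h = np.zeros(W_h.shape[0])
    for x_t in inputs:
        h = np.tanh(x_t @ W_x + h @ W_h + b)
    return h

# CNN idea: a hand-written filter that responds to a dark-to-bright vertical edge.
image = np.array([[0.0, 0.0, 1.0, 1.0]] * 4)   # 4x4 image, edge in the middle
kernel = np.array([[-1.0, 1.0]])                # 1x2 edge filter
edges = conv2d_valid(image, kernel)

# RNN idea: the same inputs in a different order give a different final state.
rng = np.random.default_rng(0)
W_x = rng.normal(size=(2, 3))
W_h = rng.normal(size=(3, 3))
b = np.zeros(3)
sequence = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
h_fwd = rnn_forward(sequence, W_x, W_h, b)
h_rev = rnn_forward(sequence[::-1], W_x, W_h, b)
print(edges)
print(h_fwd, h_rev)
```

The filter output is nonzero only where the image changes from dark to bright, and the two final hidden states differ because the recurrence depends on the order of the inputs.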

Real-World Applications of Neural Networks

- Image and Speech Recognition: Neural networks power technologies like facial recognition, virtual assistants (e.g., Siri, Alexa), and autonomous vehicles by enabling computers to understand and interpret images and sounds.

- Natural Language Processing (NLP): Tools like chatbots, translation services, and even search engines rely on neural networks to comprehend human language and generate meaningful responses.

- Healthcare: Neural networks aid in diagnosing diseases, predicting patient outcomes, and analyzing medical images, revolutionizing fields like radiology and genomics.

- Finance: Neural networks are used in algorithmic trading, fraud detection, and credit scoring, where they help detect patterns that indicate market shifts or anomalies.

Challenges and Limitations

- Data Requirements: Neural networks trained with supervised learning typically need large amounts of labeled data to perform well, which can be difficult or expensive to obtain in certain domains.

- Computational Power: Training deep neural networks requires significant computational resources, especially for complex tasks like image generation or natural language understanding.

- Interpretability: Neural networks are often described as “black boxes” because their decision-making process is not always transparent, making it difficult to understand why certain predictions are made.

The Future of Neural Networks

As neural networks continue to evolve, we can expect even more sophisticated AI applications across industries. Architectures such as transformers have already reshaped the field, and advances in computing hardware, possibly including quantum computing, may further extend what neural networks can do, enabling machines to tackle increasingly complex problems.

Conclusion

Neural networks have become a foundational technology driving innovation in AI. Their ability to learn patterns directly from data, loosely inspired by how the brain learns, has opened new frontiers in everything from medicine to entertainment. While challenges around data, compute, and interpretability remain, neural networks are likely to keep expanding how machines interact with the world.