Introduction to Multivariate Statistics

In data science and statistics, analysis often involves understanding the relationships among several variables at once. This is where multivariate statistics comes into play. Multivariate statistics encompasses a set of techniques for analyzing data in which several variables are measured on each observation, allowing researchers to understand the structure and relationships within complex datasets.

What is Multivariate Statistics?

Multivariate statistics is the branch of statistics concerned with observing and analyzing more than one outcome variable at a time. Univariate statistics (one variable) and bivariate statistics (two variables) are common, but multivariate methods analyze the variables jointly, so they can account for correlations among them that separate one- or two-variable analyses would miss.

Importance of Multivariate Statistics

Multivariate techniques are essential in various fields, including:

- Medicine: For example, in clinical trials, multivariate statistics can help in understanding the effect of several treatments on different health outcomes simultaneously.

- Marketing: Understanding consumer preferences by analyzing multiple factors like age, income, and buying behavior.

- Finance: Portfolio management analyzes the returns of many assets jointly, including how they move together, to balance expected return against risk.

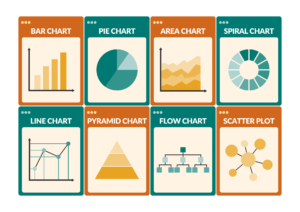

Common Multivariate Techniques

Here are some of the most commonly used multivariate techniques:

- Principal Component Analysis (PCA)

- Factor Analysis

- Cluster Analysis

- Multivariate Analysis of Variance (MANOVA)

- Canonical Correlation Analysis (CCA)

- Discriminant Analysis

1. Principal Component Analysis (PCA)

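Principal Component Analysis (PCA) transforms a set of possibly correlated variables into a new set of uncorrelated variables, the principal components. They are ordered so that the first captures the most variance in the data, the second captures the most of what remains, and so on. Keeping only the first few components reduces the dimensionality of the data while retaining most of its variation.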

Example:

Consider a dataset with information on students’ scores in different subjects. Using PCA, we can reduce the number of subjects to a few principal components that explain most of the variance in the scores.

2. Factor Analysis

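Factor Analysis is used to identify underlying relationships between variables. It assumes that each observed variable is a linear combination of a small number of unobserved (latent) factors plus a variable-specific error term, so the correlations among the observed variables are explained by the factors they share.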

Example:

In psychology, researchers might use factor analysis to identify latent variables (factors) such as intelligence, anxiety, or depression from a set of observed variables (test scores, survey responses).
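As a minimal sketch of how factor loadings can be estimated, the code below uses iterated principal axis factoring, which is one of several estimation methods (maximum likelihood is another common choice). It assumes NumPy is available, and the data are hypothetical: four simulated test scores driven by a single latent "ability" with known loadings, so the estimate can be compared with the truth.

```python
import numpy as np

def principal_axis_factoring(X, n_factors=1, n_iter=200, tol=1e-6):
    """Estimate factor loadings by iterated principal axis factoring."""
    R = np.corrcoef(X, rowvar=False)
    # Start the communalities (shared variance) at the squared multiple correlations.
    h2 = 1.0 - 1.0 / np.diag(np.linalg.inv(R))
    for _ in range(n_iter):
        reduced = R.copy()
        np.fill_diagonal(reduced, h2)  # diagonal now holds shared variance only
        vals, vecs = np.linalg.eigh(reduced)
        top = np.argsort(vals)[::-1][:n_factors]
        loadings = vecs[:, top] * np.sqrt(np.clip(vals[top], 0.0, None))
        new_h2 = (loadings ** 2).sum(axis=1)
        converged = np.max(np.abs(new_h2 - h2)) < tol
        h2 = new_h2
        if converged:
            break
    # Eigenvector signs are arbitrary; make each factor's loadings sum to a positive value.
    return loadings * np.sign(loadings.sum(axis=0))

# Hypothetical data: four test scores driven by one latent ability plus noise.
rng = np.random.default_rng(0)
n = 5000
true_loadings = np.array([0.8, 0.7, 0.6, 0.5])
ability = rng.standard_normal(n)
noise_sd = np.sqrt(1.0 - true_loadings ** 2)  # gives each test unit variance
tests = ability[:, None] * true_loadings + rng.standard_normal((n, 4)) * noise_sd

estimated = principal_axis_factoring(tests)
print(np.round(estimated.ravel(), 2))
```

With a large simulated sample the estimated loadings land close to `true_loadings`; with real survey data the number of factors has to be chosen, and the factors are often rotated before interpretation.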

3. Cluster Analysis

Cluster Analysis groups a set of objects in such a way that objects in the same group are more similar to each other than to those in other groups.

Example:

In marketing, cluster analysis can be used to segment customers into different groups based on purchasing behavior.
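One widely used clustering method is k-means, sketched below with NumPy (assumed available). The customer features (annual spend and store visits per month) and their values are hypothetical, and the farthest-point initialization is a deterministic simplification chosen here for reproducibility rather than a standard library default.

```python
import numpy as np

def kmeans(X, k, n_iter=100):
    """Lloyd's k-means with a deterministic farthest-point initialization."""
    # Start from the first row, then repeatedly add the point farthest
    # from every centroid chosen so far.
    centroids = [X[0]]
    for _ in range(1, k):
        dist = np.min([np.linalg.norm(X - c, axis=1) for c in centroids], axis=0)
        centroids.append(X[np.argmax(dist)])
    centroids = np.array(centroids, dtype=float)

    for _ in range(n_iter):
        # Assignment step: nearest centroid for every point.
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = np.argmin(dists, axis=1)
        # Update step: move each centroid to the mean of its points
        # (an empty cluster keeps its old centroid).
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids

# Hypothetical customers: [annual spend in dollars, store visits per month].
customers = np.array([
    [200, 1], [300, 2], [250, 1],
    [2000, 8], [2200, 9], [2100, 10],
], dtype=float)

# Standardize so spend (large numbers) does not swamp visits.
Z = (customers - customers.mean(axis=0)) / customers.std(axis=0)
labels, _ = kmeans(Z, k=2)
print(labels)
```

Standardizing first matters because k-means uses Euclidean distance, so a feature measured in larger units would otherwise dominate the grouping.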

4. Multivariate Analysis of Variance (MANOVA)

MANOVA is an extension of ANOVA to two or more dependent variables: instead of comparing the mean of a single outcome across groups, it tests whether the vectors of group means differ.

Example:

A researcher might use MANOVA to study the impact of different teaching methods on students’ scores in math and science simultaneously.
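A common MANOVA test statistic is Wilks' lambda, the ratio of the determinant of the within-group sums-of-squares-and-cross-products (SSCP) matrix to that of the total SSCP matrix. The sketch below computes it with NumPy (assumed available) for hypothetical math and science scores under three teaching methods. Values near 1 mean the group mean vectors barely differ; values near 0 mean they differ strongly. A full test would also convert lambda to an F or chi-square statistic and a p-value, which this sketch omits.

```python
import numpy as np

def wilks_lambda(groups):
    """One-way MANOVA Wilks' lambda: det(W) / det(W + B).

    groups: list of (n_i, p) arrays, one per group; columns are the
    dependent variables (here, math and science scores).
    """
    all_obs = np.vstack(groups)
    grand_mean = all_obs.mean(axis=0)
    p = all_obs.shape[1]
    W = np.zeros((p, p))  # within-group SSCP
    B = np.zeros((p, p))  # between-group SSCP
    for g in groups:
        centered = g - g.mean(axis=0)
        W += centered.T @ centered
        diff = (g.mean(axis=0) - grand_mean)[:, None]
        B += len(g) * (diff @ diff.T)
    return np.linalg.det(W) / np.linalg.det(W + B)

# Hypothetical [math, science] scores for three teaching methods.
method_a = np.array([[70, 72], [75, 74], [68, 70], [72, 75]], dtype=float)
method_b = np.array([[80, 83], [85, 84], [78, 80], [82, 86]], dtype=float)
method_c = np.array([[71, 70], [74, 76], [69, 72], [73, 73]], dtype=float)

lam = wilks_lambda([method_a, method_b, method_c])
print(round(lam, 3))
```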

5. Canonical Correlation Analysis (CCA)

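Canonical Correlation Analysis (CCA) examines the relationships between two sets of variables by finding pairs of linear combinations, one from each set, that are maximally correlated with each other.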

Example:

CCA can be used to study the relationship between a set of psychological variables (e.g., depression, anxiety) and a set of physical health variables (e.g., blood pressure, cholesterol).
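The canonical correlations can be computed as the singular values of the whitened cross-covariance matrix, Sxx^(-1/2) Sxy Syy^(-1/2). The sketch below assumes NumPy is available and uses simulated, hypothetical data: a latent "stress" variable drives one psychological and one physical measure, while the other two measures are pure noise.

```python
import numpy as np

def inv_sqrt(S):
    """Inverse square root of a symmetric positive-definite matrix."""
    vals, vecs = np.linalg.eigh(S)
    return vecs @ np.diag(1.0 / np.sqrt(vals)) @ vecs.T

def canonical_correlations(X, Y):
    """Canonical correlations between column sets X (n, p) and Y (n, q)."""
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    n = X.shape[0]
    Sxx = Xc.T @ Xc / (n - 1)
    Syy = Yc.T @ Yc / (n - 1)
    Sxy = Xc.T @ Yc / (n - 1)
    # Singular values (largest first) of the whitened cross-covariance.
    M = inv_sqrt(Sxx) @ Sxy @ inv_sqrt(Syy)
    return np.linalg.svd(M, compute_uv=False)

# Hypothetical simulated data with a shared latent "stress" variable.
rng = np.random.default_rng(1)
n = 2000
stress = rng.standard_normal(n)
psych = np.column_stack([stress + 0.5 * rng.standard_normal(n), rng.standard_normal(n)])
physical = np.column_stack([stress + 0.5 * rng.standard_normal(n), rng.standard_normal(n)])

r = canonical_correlations(psych, physical)
print(np.round(r, 3))
```

By construction the first canonical correlation should be near 0.8 (the correlation between the two stress-driven measures), and the second near 0.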

6. Discriminant Analysis

Discriminant Analysis finds combinations of predictor variables that best separate a set of predefined classes, and uses them to classify observations into those classes.

Example:

In finance, discriminant analysis can be used to predict whether a company will go bankrupt based on financial ratios.
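For two classes, a classic version is Fisher's linear discriminant: project each observation onto w = S^(-1)(m1 - m0), where S is the pooled within-class covariance, and compare the projection with a threshold. The sketch below assumes NumPy is available, equal class priors (threshold at the midpoint of the projected class means), and hypothetical financial ratios (current ratio, debt-to-assets) for a handful of made-up firms.

```python
import numpy as np

def fit_fisher_lda(X0, X1):
    """Two-class Fisher linear discriminant with pooled covariance.

    Returns (w, c); an observation x is assigned to class 1 if x @ w > c.
    Assumes equal priors, so c is the midpoint of the projected means.
    """
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    n0, n1 = len(X0), len(X1)
    S = ((n0 - 1) * np.cov(X0, rowvar=False)
         + (n1 - 1) * np.cov(X1, rowvar=False)) / (n0 + n1 - 2)
    w = np.linalg.solve(S, m1 - m0)
    c = 0.5 * (m0 + m1) @ w
    return w, c

def predict(X, w, c):
    """Return 1 (predicted bankrupt) or 0 (predicted healthy) per row."""
    return (X @ w > c).astype(int)

# Hypothetical firms: [current ratio, debt-to-assets ratio].
healthy = np.array([[2.1, 0.30], [1.8, 0.35], [2.5, 0.25], [2.0, 0.40], [2.3, 0.28]])
bankrupt = np.array([[0.9, 0.80], [1.1, 0.75], [0.8, 0.90], [1.0, 0.70], [0.7, 0.85]])

w, c = fit_fisher_lda(healthy, bankrupt)
print(predict(np.array([[2.2, 0.30], [0.85, 0.82]]), w, c))
```

With unequal priors or misclassification costs, the threshold `c` would be shifted accordingly.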

Step-by-Step Example: Principal Component Analysis (PCA)

Let’s dive deeper into PCA with a step-by-step example.

Data Preparation

Consider a dataset with the following student scores in four subjects:

| Student | Math | Science | English | History |
|---|---|---|---|---|
| A | 90 | 85 | 78 | 92 |
| B | 60 | 65 | 70 | 75 |
| C | 88 | 92 | 80 | 85 |
| D | 55 | 60 | 65 | 58 |
| E | 75 | 80 | 72 | 77 |

Step 1: Standardize the Data

Standardization is crucial because PCA is sensitive to the scales of the variables: a variable with a larger variance would otherwise dominate the components. We transform each variable (column) to have a mean of 0 and a standard deviation of 1.
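A minimal NumPy sketch of this step, using the table of student scores (NumPy is assumed to be available). It uses the sample standard deviation (`ddof=1`); using the population version (`ddof=0`) only rescales every column by the same constant and leaves the principal directions unchanged.

```python
import numpy as np

# Scores from the table: rows are students A-E,
# columns are Math, Science, English, History.
scores = np.array([
    [90, 85, 78, 92],
    [60, 65, 70, 75],
    [88, 92, 80, 85],
    [55, 60, 65, 58],
    [75, 80, 72, 77],
], dtype=float)

def standardize(X):
    """Center each column at 0 and scale it to unit sample standard deviation."""
    mean = X.mean(axis=0)
    std = X.std(axis=0, ddof=1)  # ddof=1: divide by n - 1 (sample std)
    return (X - mean) / std

Z = standardize(scores)
print(np.round(Z, 2))
```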

Step 2: Compute the Covariance Matrix

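The covariance matrix holds each variable's variance on its diagonal and the covariance between each pair of variables off the diagonal. For standardized data, it equals the correlation matrix.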
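Continuing the sketch (NumPy assumed; the data and standardization are repeated so the block runs on its own), the covariance matrix of the standardized scores is Z^T Z / (n - 1), because every column of Z already has mean 0.

```python
import numpy as np

scores = np.array([
    [90, 85, 78, 92],
    [60, 65, 70, 75],
    [88, 92, 80, 85],
    [55, 60, 65, 58],
    [75, 80, 72, 77],
], dtype=float)

# Standardize each column (sample std, ddof=1), as in Step 1.
Z = (scores - scores.mean(axis=0)) / scores.std(axis=0, ddof=1)

# Sample covariance of the standardized columns: Z^T Z / (n - 1).
n = Z.shape[0]
cov = Z.T @ Z / (n - 1)
print(np.round(cov, 3))
```

The same matrix comes from `np.cov(Z, rowvar=False)` or, directly from the raw scores, `np.corrcoef(scores, rowvar=False)`. For this table every off-diagonal entry is strongly positive.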

Step 3: Compute the Eigenvalues and Eigenvectors

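The eigenvectors of the covariance matrix give the directions of the principal components, and each eigenvalue gives the variance of the data along its eigenvector. Sorting the eigenvalues in descending order ranks the components by how much variance they explain.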
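A sketch of the eigendecomposition with NumPy (assumed available). `np.linalg.eigh` is designed for symmetric matrices such as a covariance matrix and returns eigenvalues in ascending order, so they are re-sorted to put the largest-variance direction first.

```python
import numpy as np

scores = np.array([
    [90, 85, 78, 92],
    [60, 65, 70, 75],
    [88, 92, 80, 85],
    [55, 60, 65, 58],
    [75, 80, 72, 77],
], dtype=float)
Z = (scores - scores.mean(axis=0)) / scores.std(axis=0, ddof=1)
cov = np.cov(Z, rowvar=False)

# eigh handles symmetric matrices; eigenvalues come back in ascending order.
eigenvalues, eigenvectors = np.linalg.eigh(cov)

# Re-sort so the component with the largest variance comes first.
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
eigenvectors = eigenvectors[:, order]  # column j pairs with eigenvalues[j]
print(np.round(eigenvalues, 3))
```

Because the data are standardized, the eigenvalues sum to the number of variables (4), the trace of the correlation matrix.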

Step 4: Compute Principal Components

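Principal components are the directions in which the data varies the most. Each component score is a linear combination of the standardized variables, with weights given by the corresponding eigenvector; keeping only the first k components reduces the four subjects to k new variables.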
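A sketch of computing the component scores by projecting the standardized data onto the sorted eigenvectors (NumPy assumed). The choice `k = 2` is purely illustrative.

```python
import numpy as np

scores = np.array([
    [90, 85, 78, 92],
    [60, 65, 70, 75],
    [88, 92, 80, 85],
    [55, 60, 65, 58],
    [75, 80, 72, 77],
], dtype=float)
Z = (scores - scores.mean(axis=0)) / scores.std(axis=0, ddof=1)
cov = np.cov(Z, rowvar=False)
eigenvalues, eigenvectors = np.linalg.eigh(cov)
order = np.argsort(eigenvalues)[::-1]
eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]

# Component scores: each column is a linear combination of the standardized
# subjects, weighted by the matching eigenvector.
components = Z @ eigenvectors

# Dimensionality reduction: keep only the first k components.
k = 2
reduced = components[:, :k]
print(np.round(reduced, 3))
```

The component scores are uncorrelated, and the variance of each one equals its eigenvalue.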

Step 5: Interpret the Results

The principal components can be interpreted to understand the data’s structure. In this table, every pair of subjects is strongly positively correlated, so the first principal component weights all four subjects with the same sign and accounts for most of the variance; it can be read as a general academic performance factor.
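To support this interpretation, the sketch below (NumPy assumed) computes the share of variance explained by each component and prints the first component's weights (loadings). An eigenvector's sign is arbitrary, so the sketch flips it so that the weights sum to a positive number before reading them.

```python
import numpy as np

subjects = ["Math", "Science", "English", "History"]
scores = np.array([
    [90, 85, 78, 92],
    [60, 65, 70, 75],
    [88, 92, 80, 85],
    [55, 60, 65, 58],
    [75, 80, 72, 77],
], dtype=float)
Z = (scores - scores.mean(axis=0)) / scores.std(axis=0, ddof=1)
eigenvalues, eigenvectors = np.linalg.eigh(np.cov(Z, rowvar=False))
order = np.argsort(eigenvalues)[::-1]
eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]

# Share of the total variance carried by each component.
explained = eigenvalues / eigenvalues.sum()

# Flip PC1 so that its loadings sum to a positive number (sign is arbitrary).
pc1 = eigenvectors[:, 0] * np.sign(eigenvectors[:, 0].sum())

for name, weight in zip(subjects, pc1):
    print(f"{name:8s} {weight:+.3f}")
print(f"PC1 explains {explained[0]:.1%} of the variance")
```

For this table, PC1 has positive weights on all four subjects and explains well over 90% of the variance. With only five students, however, such a result is illustrative rather than a reliable finding.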

Conclusion

Multivariate statistics is a powerful field that allows researchers and analysts to delve into complex datasets, uncovering relationships and structures that are not evident when looking at variables in isolation. By using techniques like PCA, Factor Analysis, Cluster Analysis, MANOVA, CCA, and Discriminant Analysis, one can gain deeper insights and make more informed decisions based on the data.