The Role of Activation Functions in Neural Networks

In the intricate world of deep learning, activation functions play an essential role, transforming neural networks from simple linear models into powerful, non-linear ones. This transformation enables the network to learn and represent complex data patterns, making activation functions the unsung heroes behind successful neural networks.

What is an Activation Function?

An activation function is a mathematical function applied to the output of each neuron in a neural network layer. A neuron first computes a weighted sum of its inputs plus a bias; that result is then passed through an activation function before moving to the next layer or becoming the final output. This process introduces non-linearity into the network, allowing it to model complex patterns.

In other words, an activation function decides how much of a neuron’s output is passed on, based on the strength of the signal the neuron computed.

Why Are Activation Functions Needed?

Without activation functions, neural networks would essentially be linear models. A stack of layers without activation functions would compute only linear mappings, regardless of the depth of the network. This limitation restricts the network’s ability to solve complex tasks like image recognition, language processing, or other high-dimensional tasks. By introducing non-linear transformations, activation functions enable neural networks to:

- Learn intricate patterns from data, even those with non-linear relationships.

- Make decisions that are closer to real-world phenomena, where relationships are often non-linear.

- Adapt to different tasks, like classification, regression, image recognition, and more, by selecting the appropriate activation function.
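The claim that stacked layers without activations stay linear can be checked numerically. The sketch below is a minimal illustration, assuming two small hand-picked weight matrices (`W1` and `W2` are hypothetical, and biases are omitted for brevity): passing an input through both layers gives the same result as a single layer whose weights are the product of the two matrices.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    """Multiply matrices A and B, both given as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


# Hypothetical weights for two layers with no activation function.
W1 = [[1.0, 2.0], [0.5, -1.0]]
W2 = [[2.0, 0.0], [1.0, 3.0]]
x = [3.0, -2.0]

# Passing x through the two layers in sequence...
two_layers = matvec(W2, matvec(W1, x))

# ...matches one layer that uses the combined matrix W2 * W1.
one_layer = matvec(matmul(W2, W1), x)

print(two_layers, one_layer)
```

However many such layers are stacked, the same collapse applies, which is why depth alone adds no expressive power without a non-linear step in between.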

Types of Activation Functions

Each activation function has its own characteristics and is suited for specific tasks or network architectures. Let’s look at some popular activation functions:

1. Sigmoid Function

The sigmoid function compresses input values to a range between 0 and 1, which makes it a natural fit for the output of binary classification models. It’s defined as:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

- Advantages: Smooth gradient; output range is bounded, useful for probabilities.

- Drawbacks: Vanishing gradient issue for large positive or negative values, which hampers learning in deep networks.

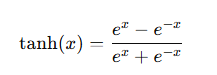
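As a rough sketch of the sigmoid and its vanishing gradient, the snippet below uses only Python's `math` module. The helper names `sigmoid` and `sigmoid_grad` are illustrative choices, and the simple formula used here assumes moderate input sizes (a very large negative input would overflow `math.exp`).

```python
import math


def sigmoid(x):
    """Logistic sigmoid: maps any real x into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of the sigmoid, s(x) * (1 - s(x)); it peaks at 0.25 when x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


# The gradient is largest near zero and shrinks quickly as |x| grows.
for x in (-10.0, -2.0, 0.0, 2.0, 10.0):
    print(f"x={x:6.1f}  sigmoid={sigmoid(x):.5f}  grad={sigmoid_grad(x):.5f}")
```

The printed gradients at x = -10 and x = 10 are close to zero, which is the saturation behavior listed under the drawbacks.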

2. Hyperbolic Tangent (Tanh) Function

The Tanh function maps inputs to a range between -1 and 1, and it’s often used in hidden layers of a neural network.

- Advantages: Zero-centered outputs help in faster convergence.

- Drawbacks: Like the sigmoid, it suffers from the vanishing gradient problem.
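A short sketch of both points, using the standard library's `math.tanh`. The helper `tanh_grad` is an illustrative name; it relies on the identity that the derivative of tanh(x) is 1 - tanh(x)^2.

```python
import math


def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)**2; it equals 1 at x = 0."""
    return 1.0 - math.tanh(x) ** 2


# Outputs are symmetric around zero, and the gradient fades for large |x|.
for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"x={x:5.1f}  tanh={math.tanh(x):+.5f}  grad={tanh_grad(x):.5f}")
```

Compared with the sigmoid, the peak gradient is 1 rather than 0.25, but it still approaches zero once the input saturates.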

3. Rectified Linear Unit (ReLU) Function

The ReLU function is one of the most widely used activation functions due to its simplicity and efficiency. Defined as:

$$\text{ReLU}(x) = \max(0, x)$$

- Advantages: Computationally efficient; helps mitigate the vanishing gradient problem.

- Drawbacks: Dead neuron problem (when neurons always output zero for all inputs).

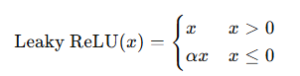
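The sketch below illustrates ReLU and the dead neuron problem in plain Python. The neuron's weight and bias (`w`, `b`) and the input values are hypothetical, chosen so that the pre-activation is negative for every input; the gradient at exactly zero is taken to be 0 here, which is a common convention rather than a mathematical necessity.

```python
def relu(x):
    """ReLU: passes positive values through and clips the rest to zero."""
    return max(0.0, x)


def relu_grad(x):
    """Gradient of ReLU: 1 for positive inputs, 0 otherwise (0 at x = 0 by convention)."""
    return 1.0 if x > 0 else 0.0


# A hypothetical neuron whose bias is so negative that w * x + b < 0
# for every input in this data set.
w, b = 1.0, -10.0
inputs = [0.0, 0.25, 0.5, 0.75, 1.0]

outputs = [relu(w * x + b) for x in inputs]
grads = [relu_grad(w * x + b) for x in inputs]

print(outputs)  # every output is zero
print(grads)    # every gradient is zero, so w and b receive no update
```

Because the gradient is zero for every input, gradient descent has no signal with which to move this neuron back into the active region.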

4. Leaky ReLU

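To address the dead neuron problem, the Leaky ReLU introduces a small slope for negative inputs. With a small constant $\alpha$ (a value such as 0.01 is common), it can be written as:

$$\text{LeakyReLU}(x) = \max(\alpha x, x)$$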

- Advantages: Avoids dead neurons by allowing a small gradient when $x \leq 0$.

- Drawbacks: Slightly higher computational cost than ReLU, and the negative slope is an extra hyperparameter to choose.

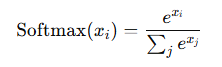
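A minimal sketch of Leaky ReLU, assuming a negative slope of `alpha=0.01` (a commonly used default, not a value taken from this article). The function names are illustrative.

```python
def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: identity for positive inputs, a small slope alpha otherwise."""
    return x if x > 0 else alpha * x


def leaky_relu_grad(x, alpha=0.01):
    """Gradient of Leaky ReLU: 1 for positive inputs, alpha otherwise."""
    return 1.0 if x > 0 else alpha


# Unlike plain ReLU, negative inputs still produce a small, non-zero gradient.
for x in (-5.0, -1.0, 0.5, 3.0):
    print(f"x={x:5.1f}  output={leaky_relu(x):+.3f}  grad={leaky_relu_grad(x):.2f}")
```

Since the gradient never reaches exactly zero, a neuron pushed into the negative region can still receive updates and recover.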

5. Softmax

Softmax is commonly used in the output layer for multi-class classification tasks. It converts the output into a probability distribution over classes.

- Advantages: Ideal for multi-class problems, ensures outputs add up to 1.

- Drawbacks: Can saturate when one raw score is much larger than the others, pushing its probability close to 1 and the gradients close to zero.
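One way softmax might be written in plain Python is shown below. Subtracting the maximum score before exponentiating is a standard numerical-stability trick that leaves the result unchanged; the example scores are made up for illustration.

```python
import math


def softmax(logits):
    """Convert a list of raw scores into probabilities that sum to 1."""
    m = max(logits)  # shift by the max so math.exp never overflows
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for three classes.
probs = softmax([2.0, 1.0, 0.1])
print(probs, sum(probs))

# When one score dominates, the output saturates near a one-hot vector.
saturated = softmax([20.0, 1.0, 0.1])
print(saturated)
```

The second call shows the saturation drawback: almost all of the probability mass lands on the first class.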

How Activation Functions Influence Learning

Activation functions determine how quickly and accurately a neural network can learn patterns. By introducing non-linearity, they help the network:

- Model complex patterns in data.

- Control gradient flow during backpropagation. For instance, ReLU helps maintain gradients, while sigmoid and tanh can lead to vanishing gradients.

- Converge more easily, provided the chosen function complements the network’s architecture and task.

The choice of activation function can impact how fast a network learns, how well it generalizes, and how efficiently it reaches an optimal solution. Here’s a breakdown of where they’re commonly used:

- Sigmoid and Tanh: Traditionally in older architectures or specific applications like binary classification.

- ReLU: Hidden layers in CNNs and dense networks for faster training.

- Softmax: Output layers for multi-class classification.

Selecting the Right Activation Function

Choosing the right activation function requires understanding the problem type and network structure:

- Binary Classification: Sigmoid or softmax (for binary probabilities).

- Multi-Class Classification: Softmax, as it converts the output into a probability distribution.

- Image or Signal Processing: ReLU or its variants, such as Leaky ReLU, for faster convergence and efficiency.

Visualizing Activation Function Impacts

Consider a scenario where a neural network learns to classify images of animals. Without an activation function, it would struggle to separate dog images from cat images because it could only draw linear decision boundaries. However, with ReLU in hidden layers and softmax in the output, the network can learn complex, non-linear decision boundaries to accurately classify the images.
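An image classifier is too large to show here, so the sketch below uses XOR as a tiny stand-in for a problem that no linear boundary can solve. The weights are hand-picked for illustration (not learned), and the function names are hypothetical. With ReLU in the hidden layer the network reproduces XOR; with the activation removed, the same weights collapse to a linear function that cannot.

```python
def relu(x):
    """ReLU: passes positive values through and clips the rest to zero."""
    return max(0.0, x)


def xor_net(x1, x2):
    """Two hidden ReLU units followed by a linear output (hand-picked weights)."""
    h1 = relu(x1 + x2)
    h2 = relu(x1 + x2 - 1.0)
    return h1 - 2.0 * h2


def xor_net_no_activation(x1, x2):
    """Same weights with the ReLU removed: the result is linear in x1 and x2."""
    h1 = x1 + x2
    h2 = x1 + x2 - 1.0
    return h1 - 2.0 * h2


for x1, x2 in [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]:
    print((x1, x2), xor_net(x1, x2), xor_net_no_activation(x1, x2))
```

The ReLU version outputs 0, 1, 1, 0 across the four inputs, while the version without an activation simplifies to 2 - (x1 + x2), a straight line that cannot match XOR.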

Key Takeaways

- Activation functions introduce non-linearity, essential for complex tasks.

- They control information flow through layers, directly impacting learning efficiency.

- Selecting the right function can prevent issues like vanishing gradients, dead neurons, and slow convergence.

Conclusion

Activation functions are fundamental components in neural networks, enabling them to learn and generalize complex relationships in data. Understanding the strengths and limitations of each activation function allows data scientists and engineers to build more effective and efficient models, capable of tackling challenging tasks in fields like image recognition, language processing, and beyond.

For any neural network, selecting an activation function is akin to choosing a lens for a camera—it shapes how the network views and processes information. With the right choice, a neural network can achieve incredible performance and accuracy, unlocking new possibilities in AI and deep learning.

The primary purpose of an activation function is to introduce non-linearity into a neural network. This non-linearity enables the network to learn and model complex patterns in data, as purely linear transformations would limit the network to only linear relationships. Activation functions also help control the flow of information between neurons, determining which neurons should be activated based on the input strength.

The choice of activation function directly impacts how effectively and efficiently a neural network learns. For example, ReLU (Rectified Linear Unit) is widely used in hidden layers as it helps mitigate the vanishing gradient problem, allowing faster and more stable training. Sigmoid is often used in output layers for binary classification because its bounded output can be read as a probability, and Softmax plays the same role for multi-class tasks. Choosing the right activation function can prevent issues like slow convergence, dead neurons, or gradient problems, ultimately improving the model’s performance.

The vanishing gradient problem occurs when gradients become very small during backpropagation, slowing down learning and sometimes halting it altogether. Sigmoid and Tanh activation functions are prone to this issue, as their gradients diminish significantly for large positive or negative inputs. This problem often makes learning in deep networks challenging. To address it, functions like ReLU and its variants (Leaky ReLU) are used since they maintain gradients more effectively.
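The effect can be sketched with a deliberately simplified model of backpropagation: the gradient reaching the first layer is treated as a product of one local derivative per layer. The sketch assumes every weight is 1 and every layer sees the same pre-activation, which real networks do not, so the numbers are only indicative. The function names and the depth of 20 are illustrative choices.

```python
import math


def sigmoid_grad(x):
    """Derivative of the sigmoid; its maximum value is 0.25 at x = 0."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def chained_gradient(local_grad, pre_activation, depth):
    """Product of `depth` identical local derivatives (all weights assumed to be 1)."""
    return local_grad(pre_activation) ** depth


depth = 20
sigmoid_chain = chained_gradient(sigmoid_grad, 0.0, depth)  # best case for sigmoid
relu_chain = chained_gradient(relu_grad, 1.0, depth)        # an active ReLU path

print(f"sigmoid, {depth} layers: {sigmoid_chain:.3e}")
print(f"relu,    {depth} layers: {relu_chain:.3e}")
```

Even in the sigmoid's best case, where each layer contributes its maximum derivative of 0.25, the product shrinks geometrically with depth, while an active ReLU path keeps the gradient at 1.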

ReLU is preferred in hidden layers because it is computationally efficient and mitigates the vanishing gradient problem, which is common with Sigmoid and Tanh functions. Its gradient is exactly 1 for positive inputs, so gradients pass through active neurons without shrinking, and it outputs zero for negative inputs, which yields sparse activations that are cheap to compute. This simple behavior of passing only positive values helps deep networks learn faster and converge more reliably, making it a common default for hidden layers in deep learning models.

Softmax is typically used in the output layer of a neural network for multi-class classification tasks. It converts raw output scores into probabilities that sum up to 1, providing a clear prediction for each class. This makes Softmax especially useful for tasks where the model needs to assign probabilities to multiple classes, such as image classification. Its output enables the network to not only choose a class but also provide the confidence level for each class.